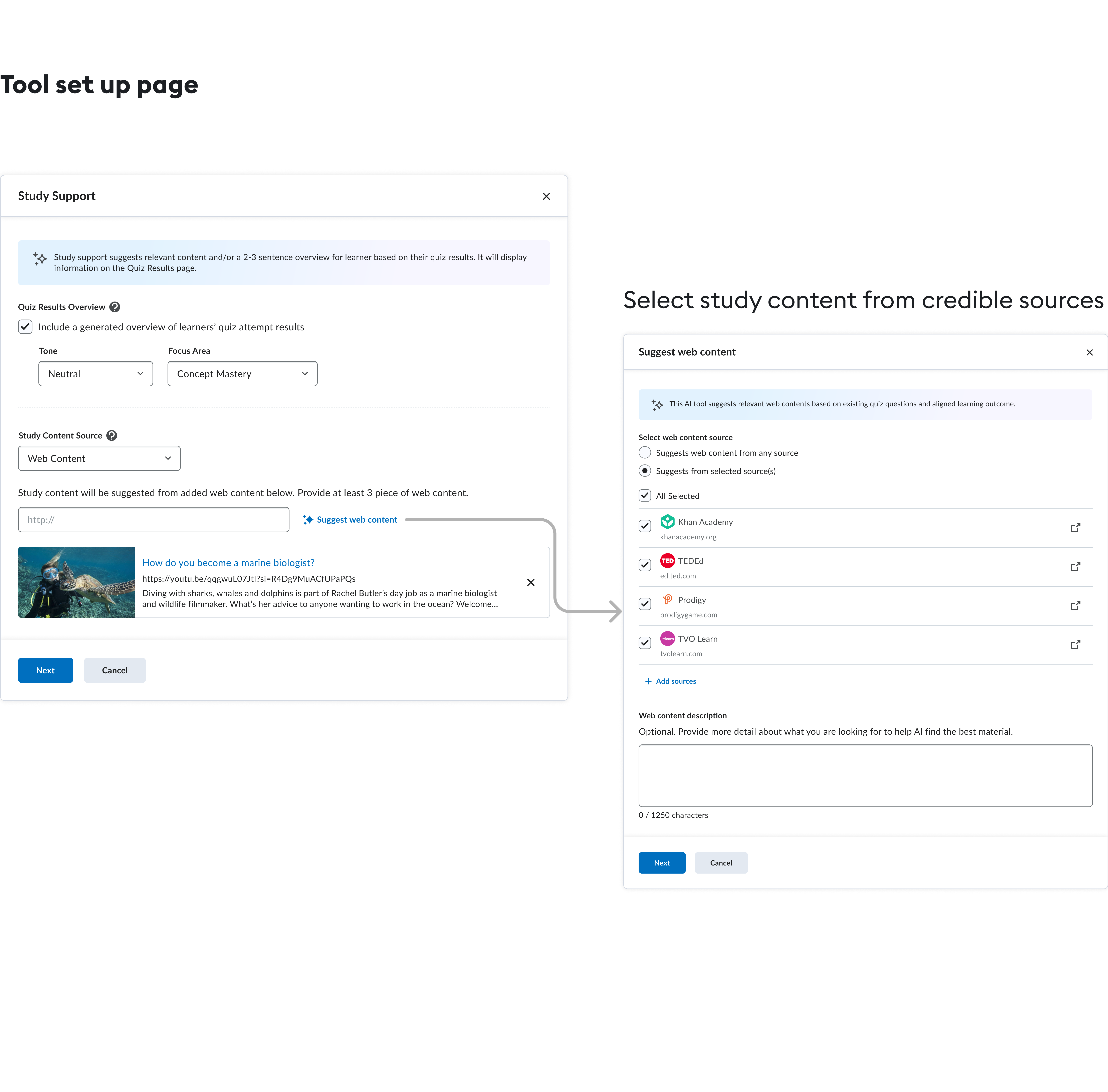

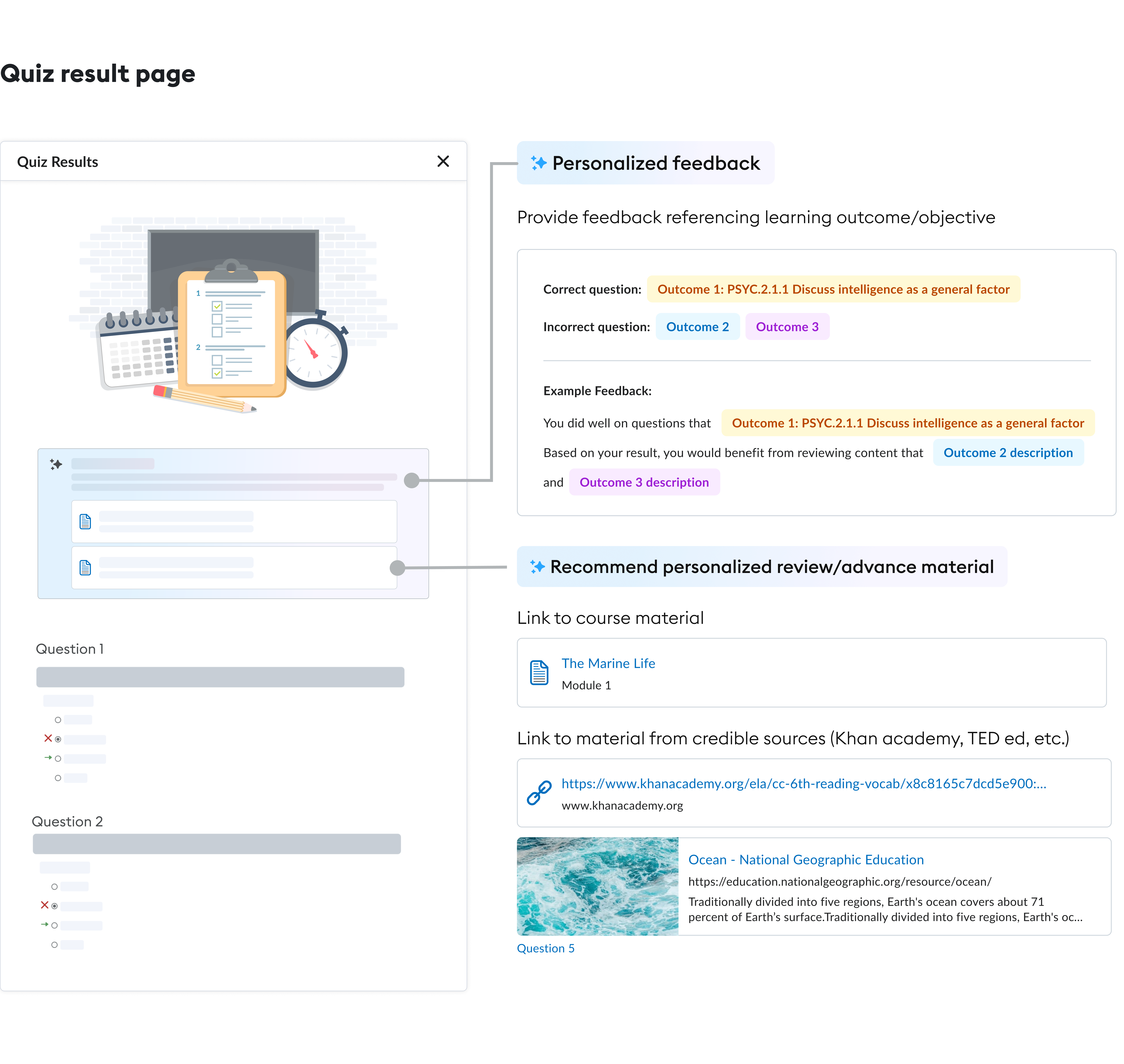

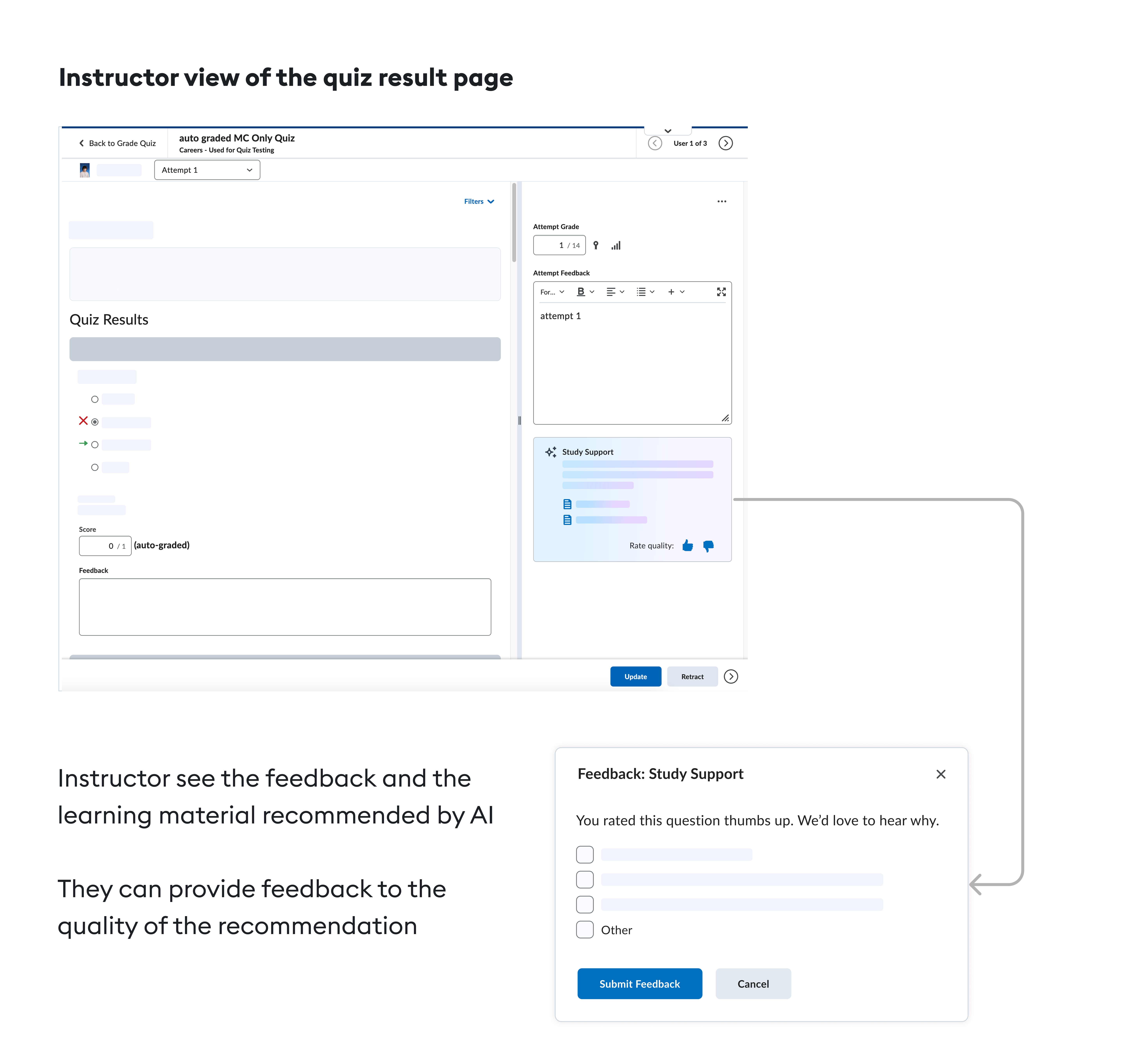

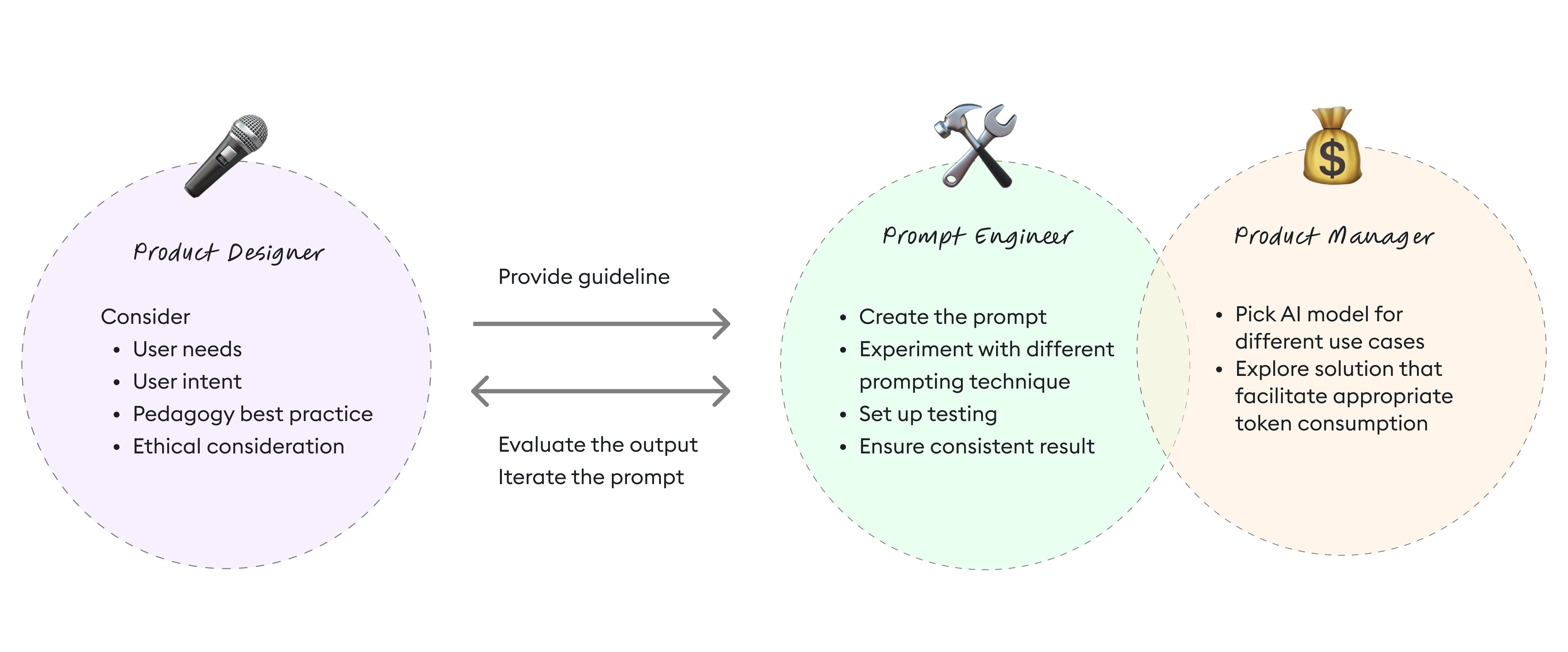

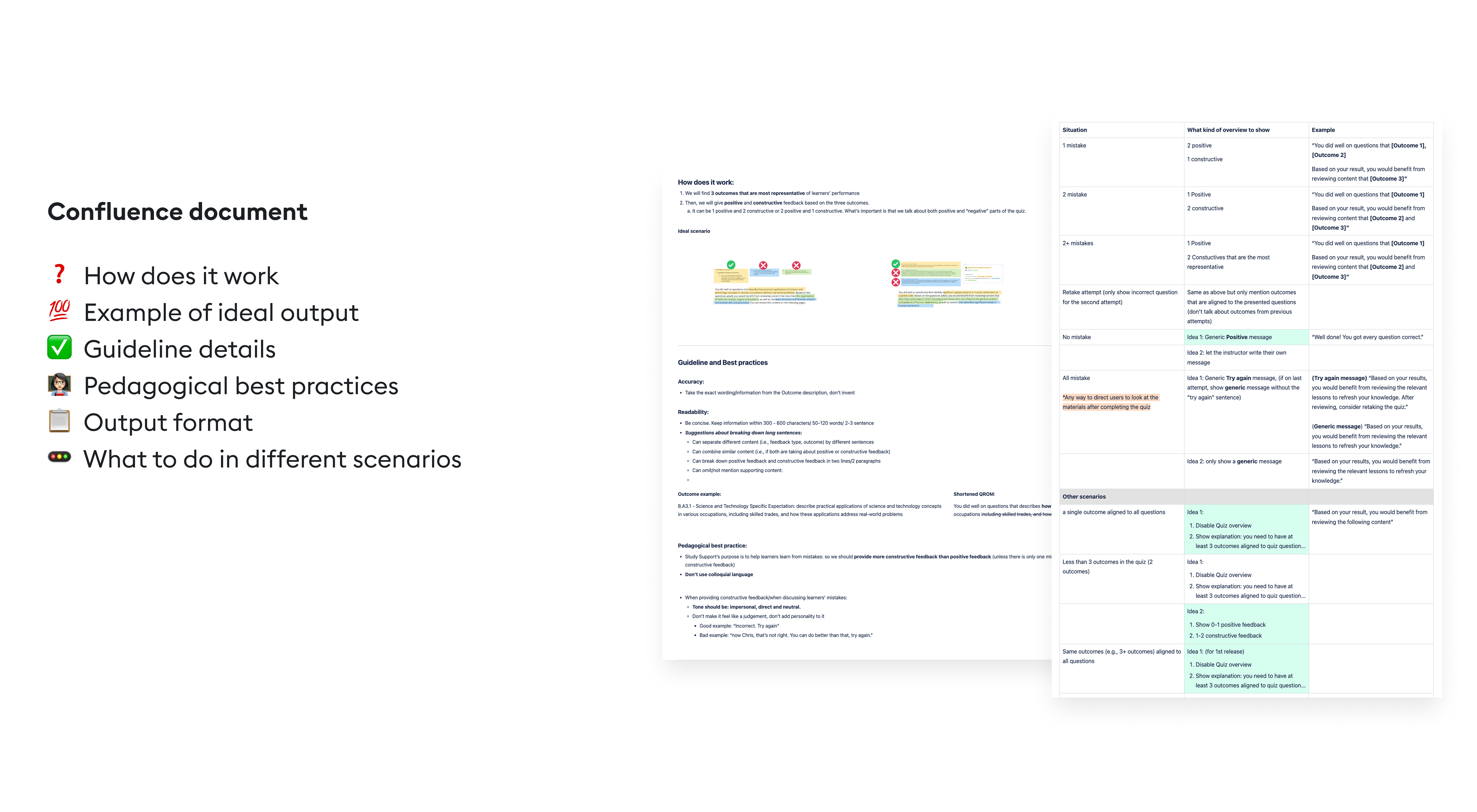

As the designer, I considered user needs, intentions, pedagogy, and ethics, and gave the machine learning experts a guideline describing the ideal result for different scenarios.

As results were generated, I worked closely with the team to evaluate whether the output met our intent, and we iterated until it did.