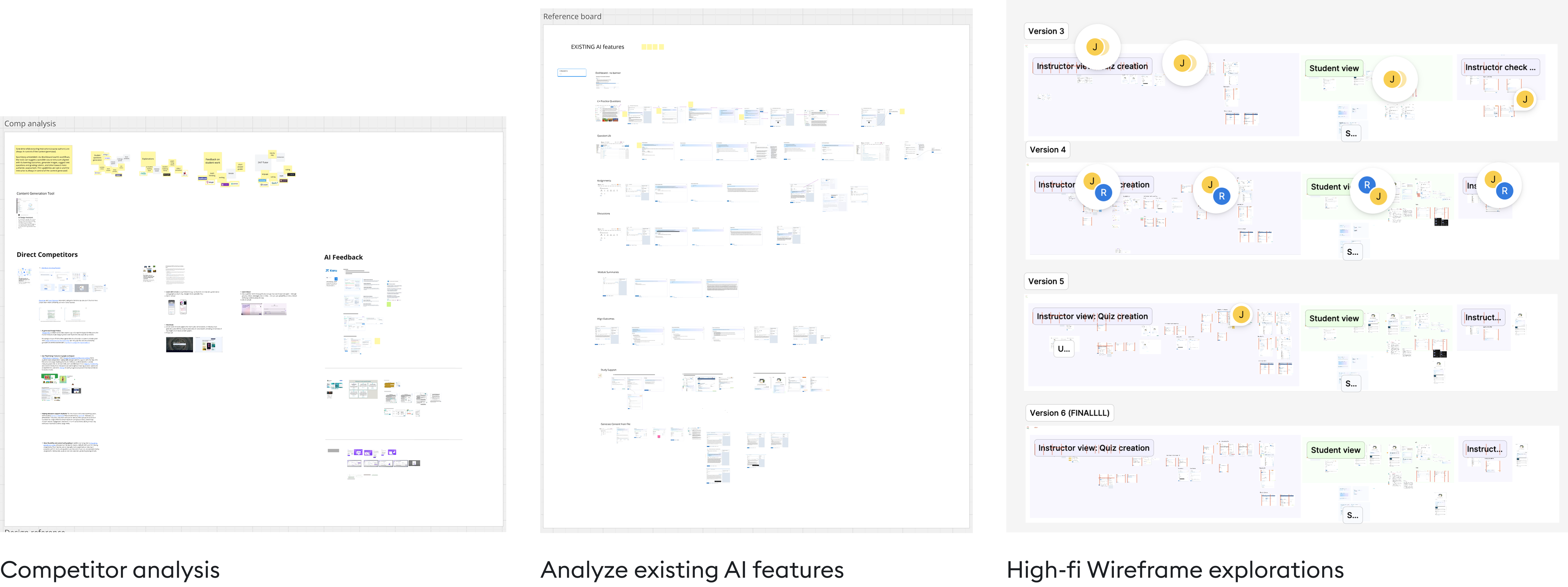

While analyzing the competitive landscape, I realized something crucial.

Yes, there were tons of learner-facing AI tools out there, some of them so advanced that it would be hard for us to catch up.

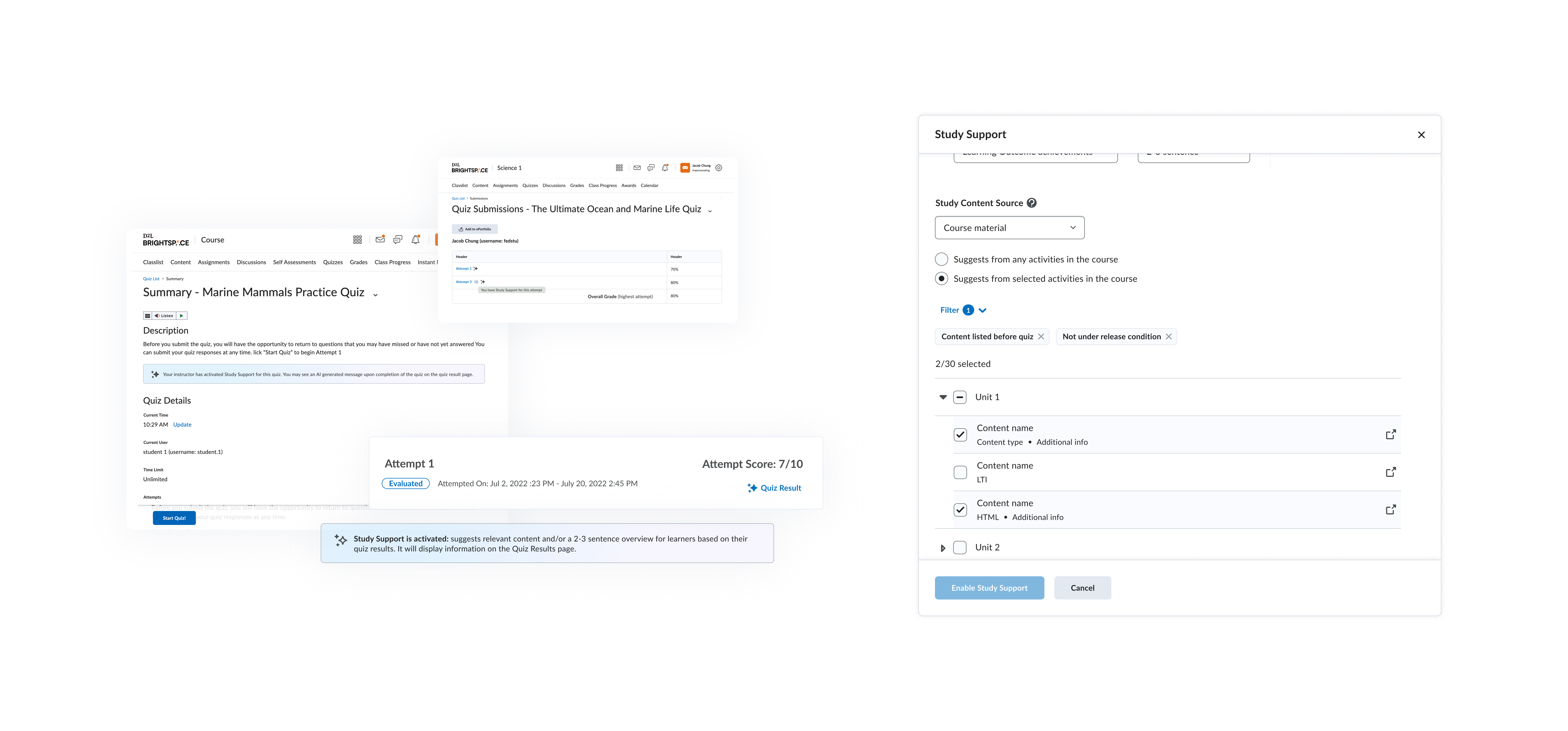

But they were all missing something fundamental: context about the student's actual performance and the instructor's specific content.

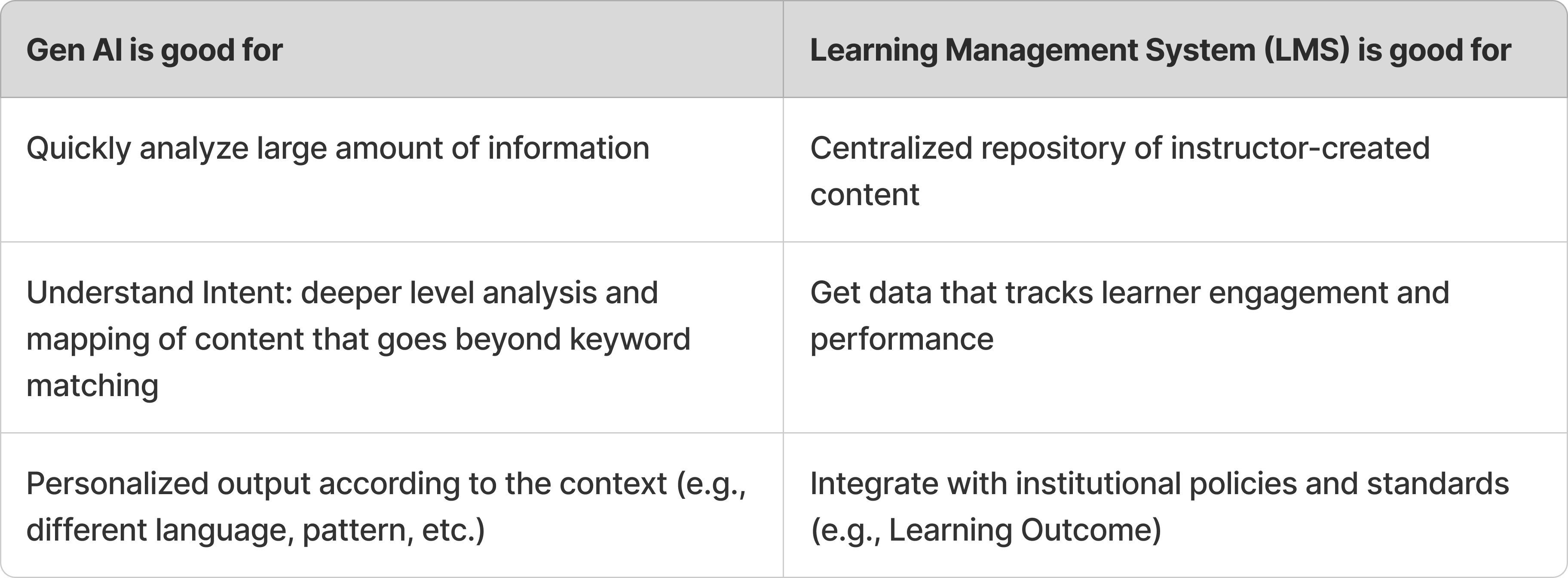

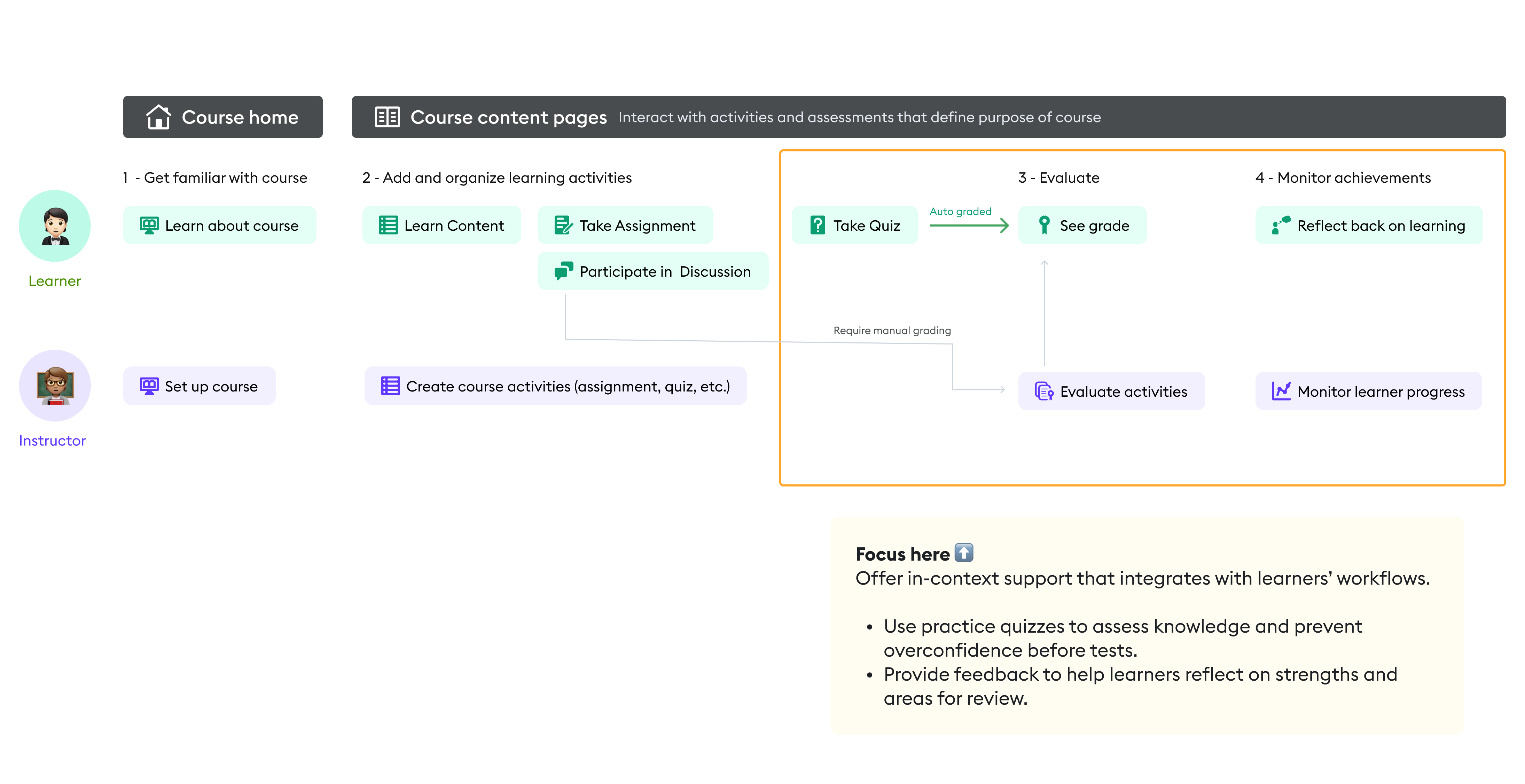

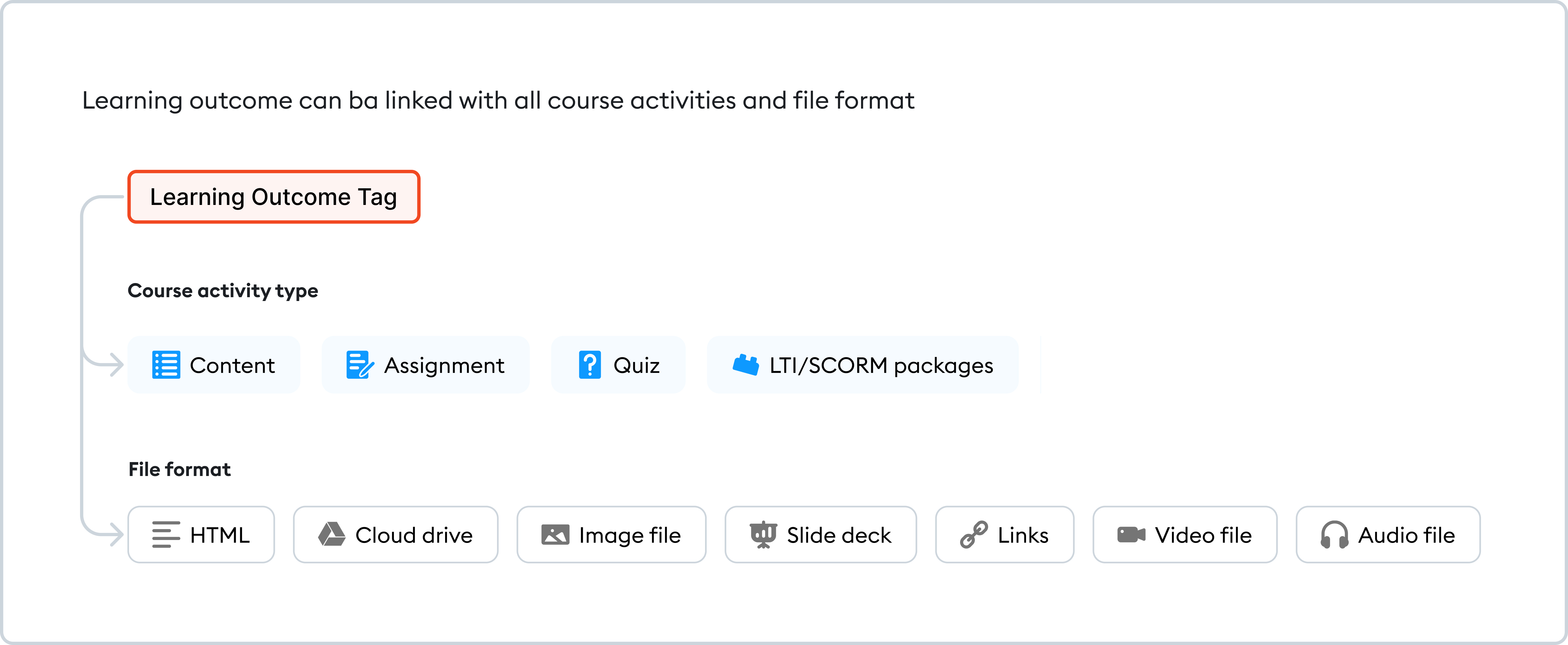

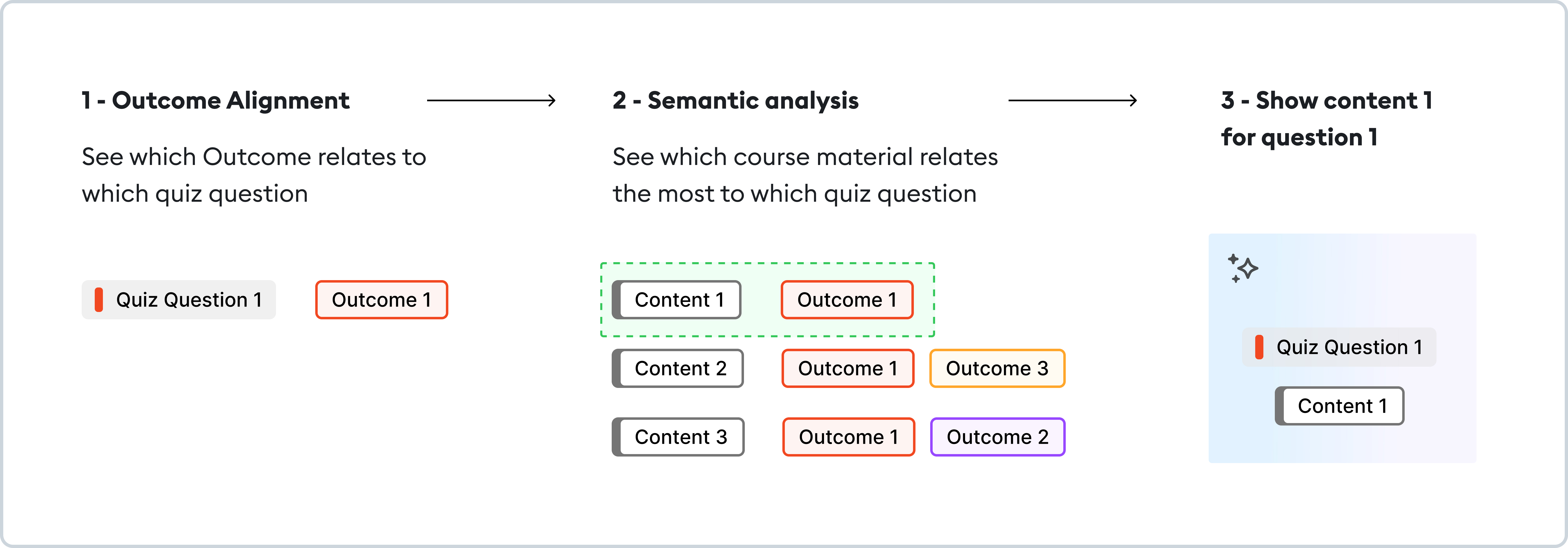

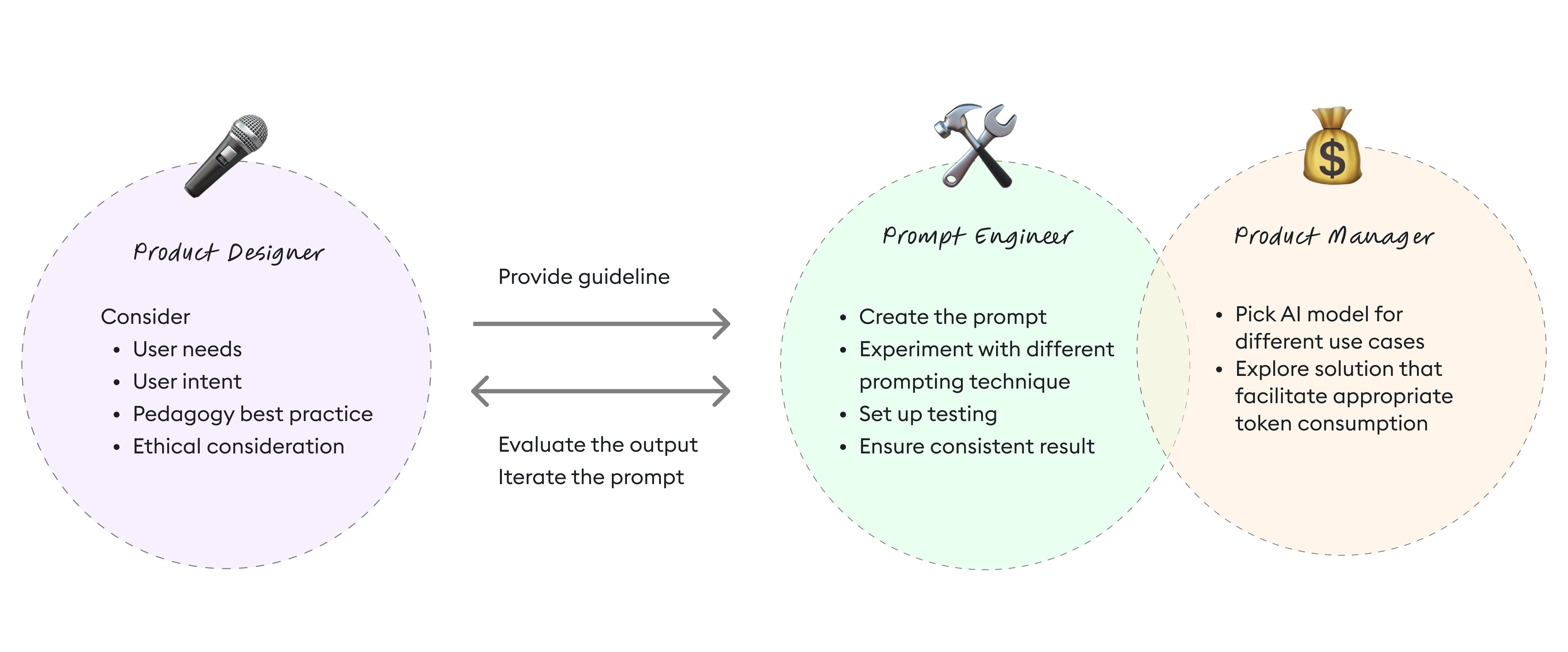

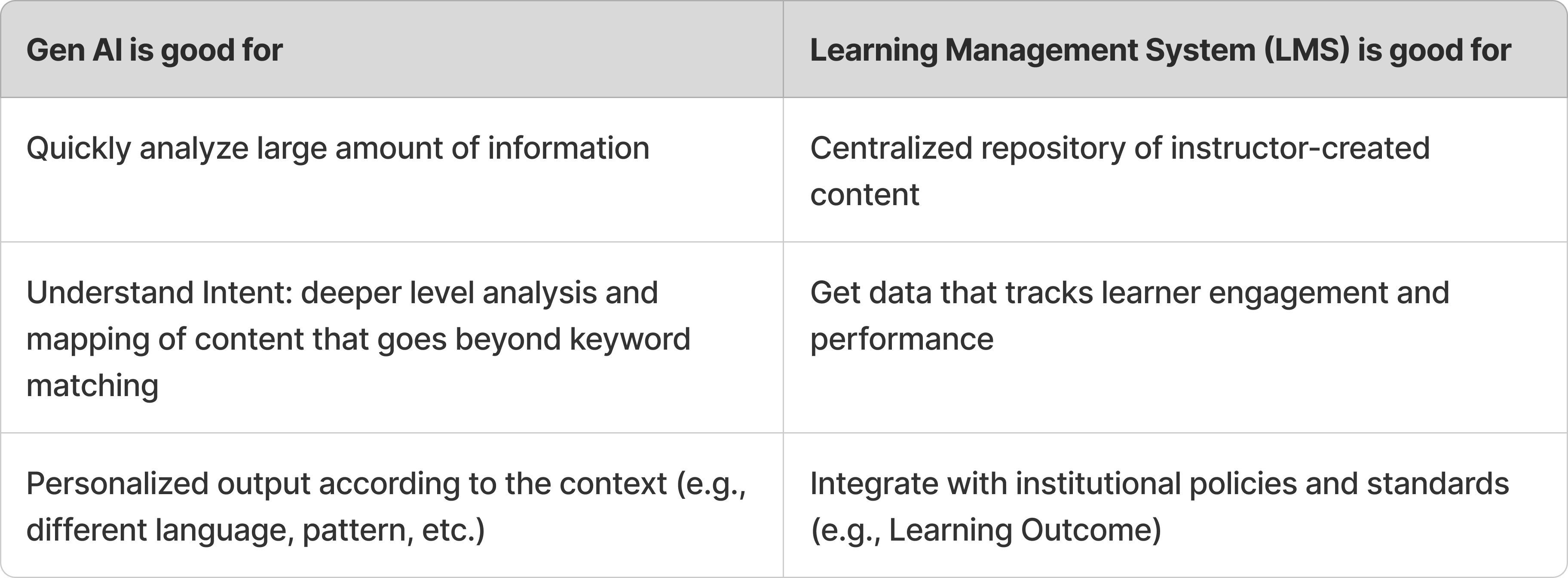

I started mapping what AI does well against what our Learning Management System uniquely offers:

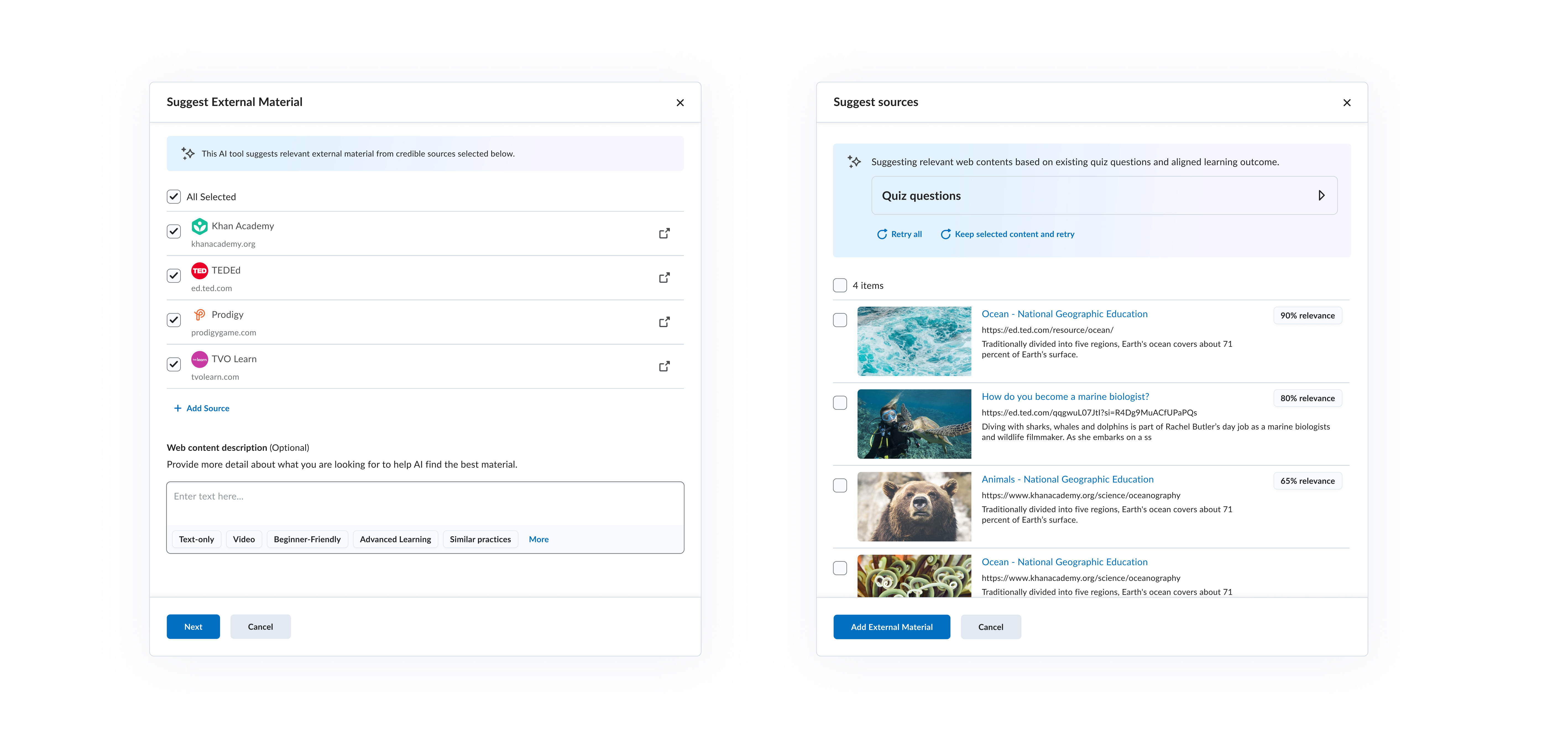

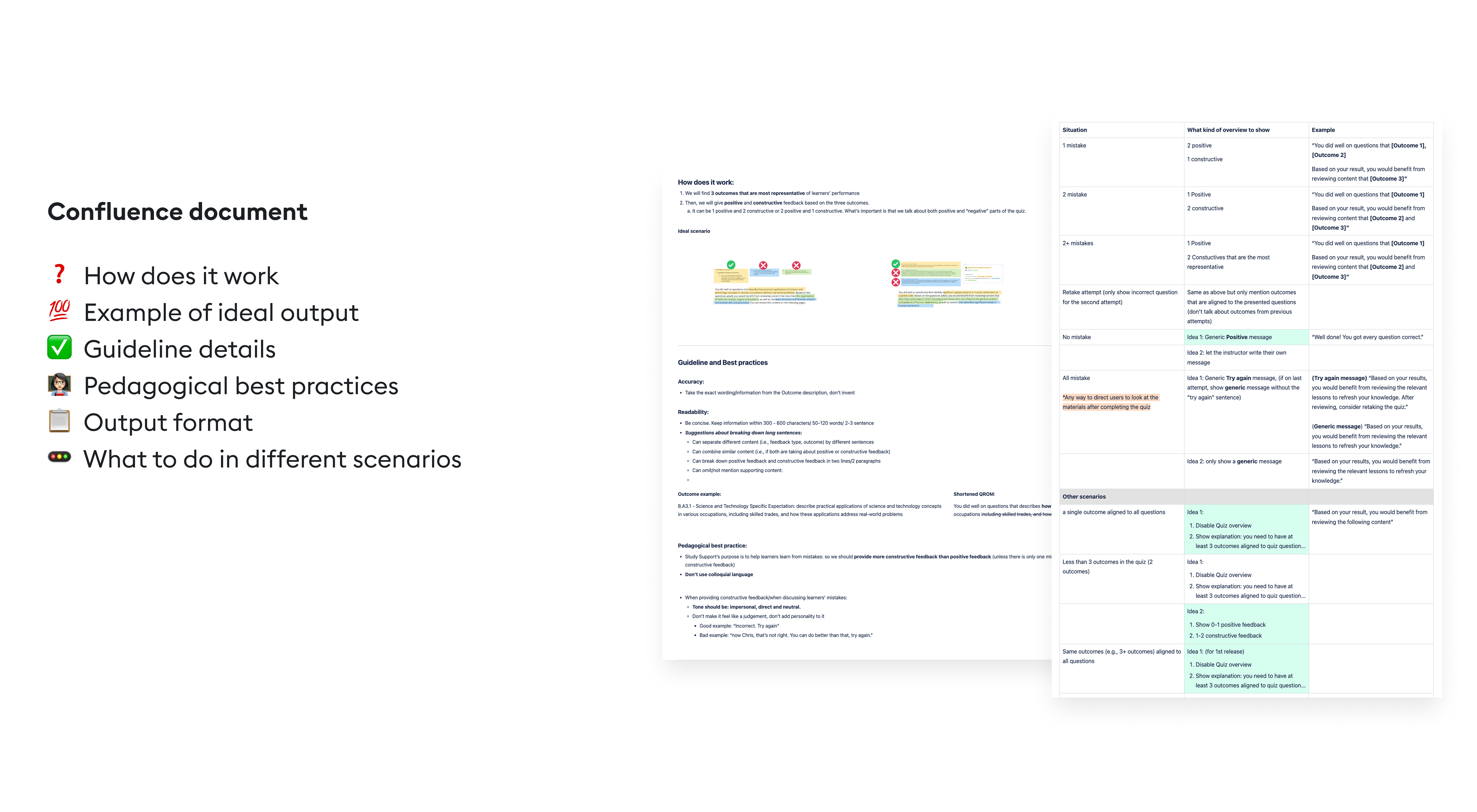

AI excels at analyzing vast amounts of information, understanding intent beyond keywords, and personalizing outputs. Meanwhile, our LMS houses all the instructor-created content, tracks every student interaction, and integrates with institutional learning outcomes.

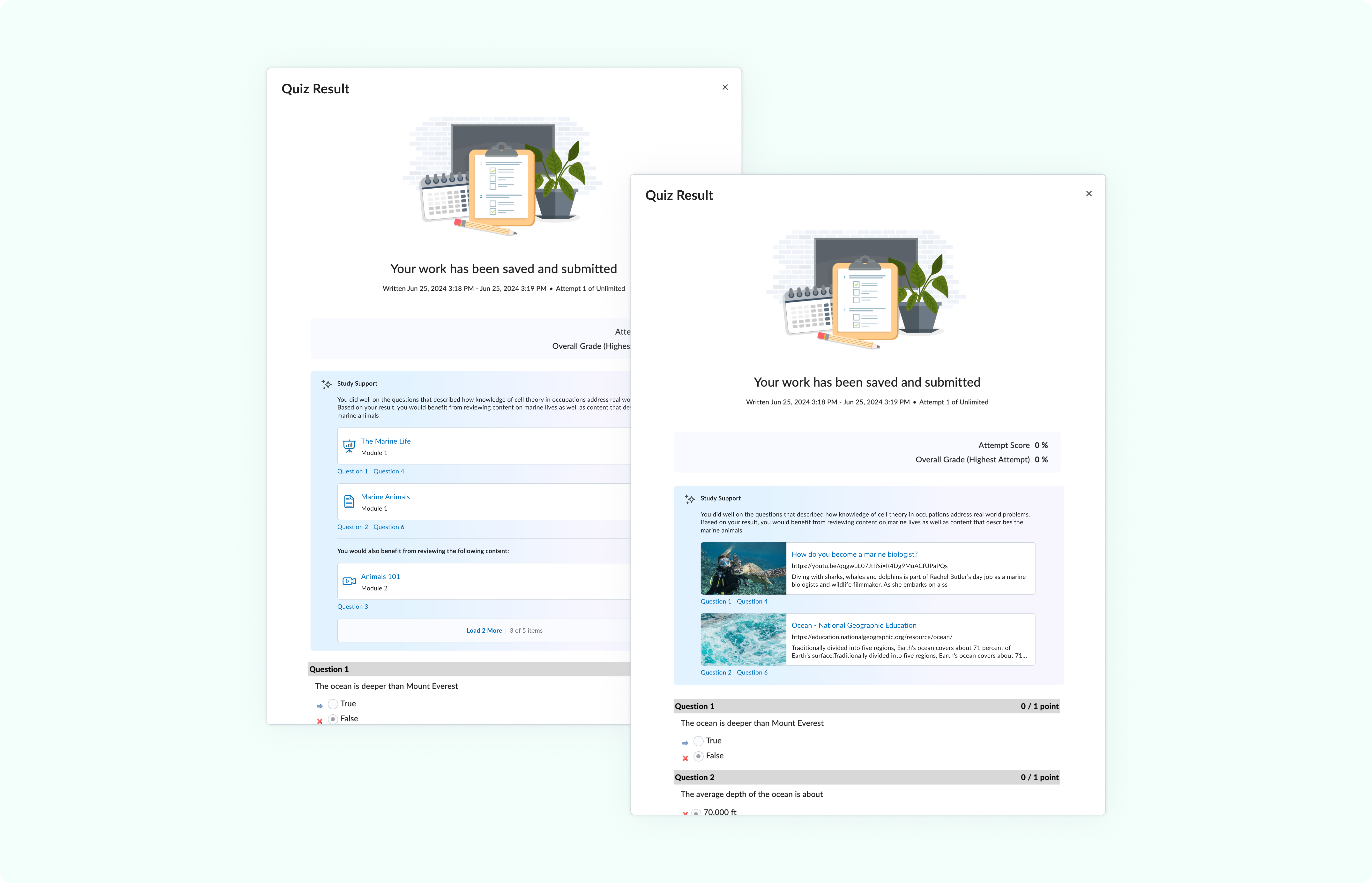

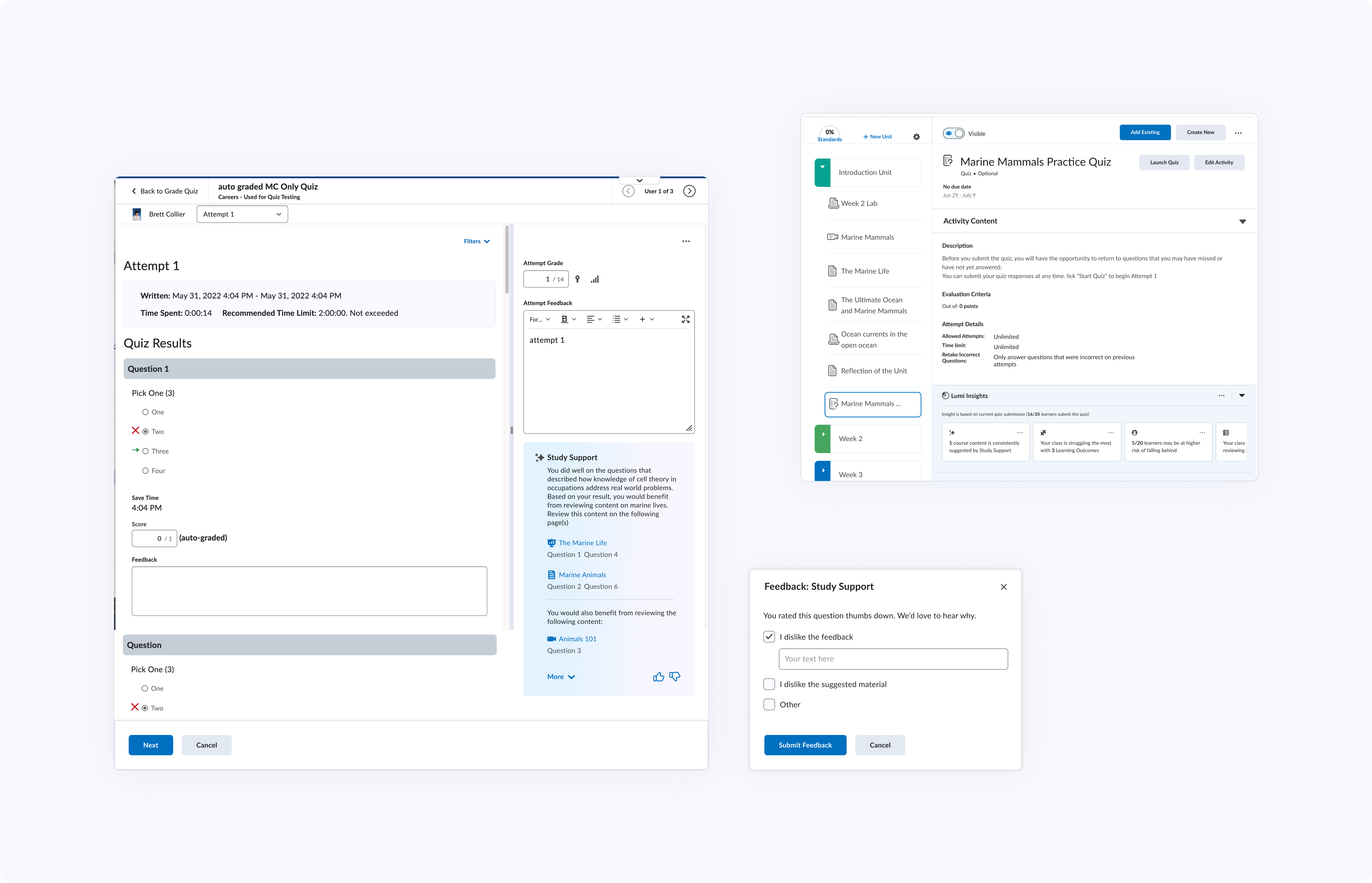

The intersection was our sweet spot. We could build something no standalone AI tool could replicate: personalized feedback grounded in each student's actual performance data, paired with instructor-validated content that helps learners close their knowledge gaps.